SEO Science Snake Oil vs Solid Creative SEO

Bah Humbug to #SEOScience. It is no great mystery that these #CreativeSEO bets will pay off.

There is a habit pervading the SEO industry to test all the anecdotal minutiae, rumors from Google, and random “insights” from discussions with Google spokespeople. Findings get reported under the auspices of #SEOScience, but amount to little more than some impassioned SEO tail chasing. Upon which all the mountebanks show up to compare their brand of “Snake Oil.”

The problem with trying to “prove” SEO axioms is that doing so generates spammy experiments that don’t really tell us anything new and tend to just confirm, unscientifically, what we already suspect. Moreover, it tells us nothing about what kinds of SEO work will stand the test of time. It is common sense that our tiny corner of the internet is not a good representation of what is actually going on online — you know, on the actual internet.

Nonetheless, that doesn’t stop us talking about what works, even if all we have are anecdotes. And most SEO science experiments are closer to lay science, which, as Michael Martinez writes, also has its benefits.

My creative SEO thesis is that SEO science is completely missing the point by testing the wrong things and sharing results about its circular findings. I agree with Martinez that real SEO science would share data. If you’re not sharing data, it’s all just hearsay. But shared data is what SEO science is missing more than anything, and its continual absence leaves an entire industry open to the charge of “selling Snake Oil.” And that is plain unfair on all of us.

Even if you think that most SEO science is engaged in pointless posturing like I do (because apparently you only need to tell a more sensational SEO story than the Google Guidelines to earn the title SEO rockstar/data prophet/marketing ninja/social media guru), we can still generate value for our readers by laying down the mantle of science and reporting the anecdotes of our experiments more honestly: as anecdotes.

I’ve crafted this post as proof. This is what my own data says. This is some of what I know about SEO right now. And it’s all just anecdotes.

Below is a series of obvious creative SEO bets that are all aligned with what’s written in Google’s Guidelines. Each is accompanied by an example of the kind of measurable results you could generate. I’ve written a companion post to this one and I’m deliberately using hashtags in this post to mark both sides of the debate, and I hope you will join in this important industry discussion on Twitter. If we widen our debate to include hashtags, we can empower all voices in the industry and defuse obstructive partisanship.

My hope is that the highlights below will embolden you to bet on the “unproven” and anecdotal effectiveness of #creativeSEO: a nuanced way of thinking that follows the spirit of the Google Guidelines.

Google says, “Make sure that your title elements and ALT attributes are descriptive and accurate.”

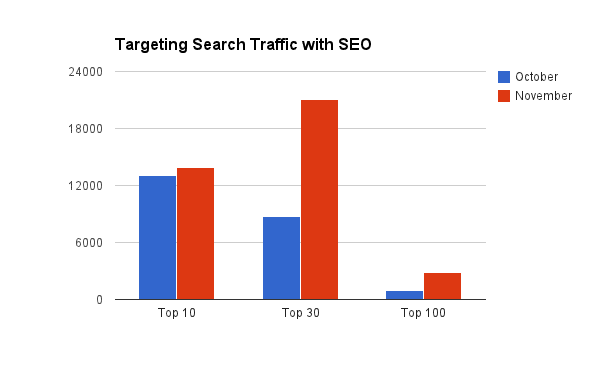

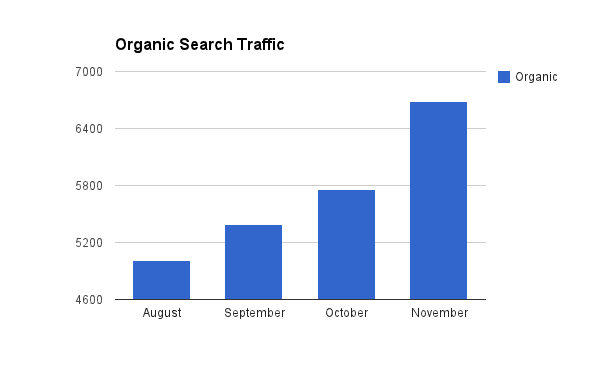

Changing the Title tag can yield a ranking benefit in just one month.

The only mystery here is to guess what keywords an information seeker might use.

It will also cause a rank shift in the back of the index.

It works because you just did what the Google Guidelines told you to do.

Which also delivers an indexing benefit.

Are you getting the idea now?

And a search traffic benefit.

It really does. Cool, huh?

But it still might take a seasonal dip.

You can’t win ‘em all, buddy!
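If you want to check the title and ALT guideline quoted at the top of this section against your own pages, a rough audit takes only a few lines. What follows is a minimal sketch, not a tool I used for the results above: the class name `TitleAltAudit`, the `audit` helper, and the sample HTML are all made up for illustration, and it relies only on Python’s standard `html.parser` module. It can tell you whether a title or ALT text exists, not whether it is descriptive and accurate. That part is still your job.

```python
from html.parser import HTMLParser


class TitleAltAudit(HTMLParser):
    """Collects the page title and the src of every <img> without ALT text.

    Hypothetical helper for illustration only.
    """

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""
        self.images_missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "img":
            attributes = dict(attrs)
            # An empty alt can be legitimate for decorative images;
            # this sketch flags it anyway so a human can decide.
            if not (attributes.get("alt") or "").strip():
                self.images_missing_alt.append(attributes.get("src"))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    """Return (title text, list of image sources lacking ALT text)."""
    parser = TitleAltAudit()
    parser.feed(html)
    parser.close()
    return parser.title.strip(), parser.images_missing_alt


# Made-up sample page.
SAMPLE = (
    "<html><head><title> Creative SEO </title></head><body>"
    '<img src="a.png" alt="Traffic graph">'
    '<img src="b.png">'
    '<img src="c.png" alt=""/>'
    "</body></html>"
)

title, missing = audit(SAMPLE)
print(title, missing)
```

An empty title or a long list of images without ALT text is the cue to go back and write something an information seeker would recognize.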

Google says, “Create a useful, information-rich site, and write pages that clearly and accurately describe your content.”

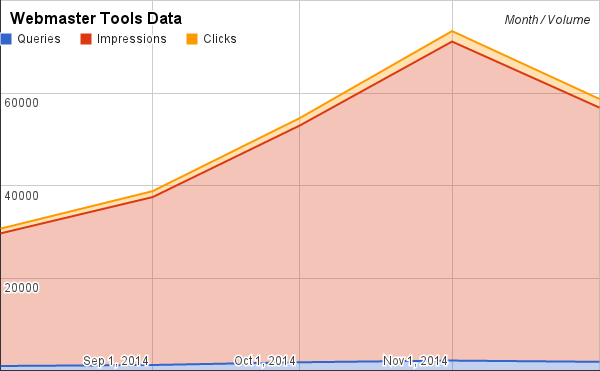

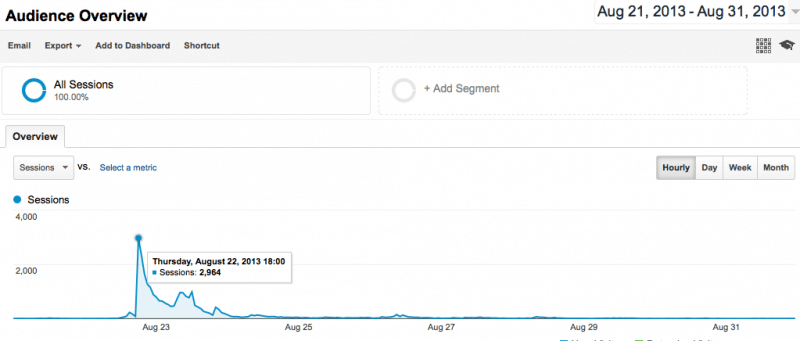

Fresh content can deliver huge instant wins.

One single initiative generated as much traffic and as many links to the site as the entire site had garnered in an entire year. Yet, that it happened at all was a mixture of luck and experience. It was simply a great gamble.

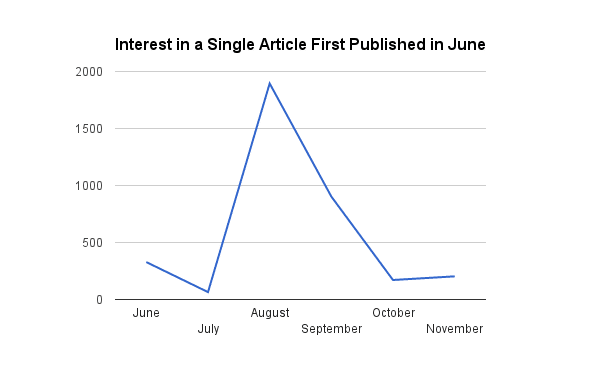

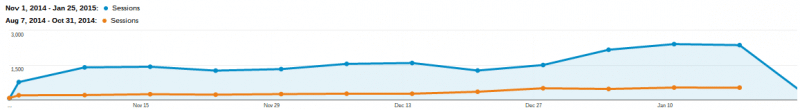

But a single story can reap rewards much later than expected.

Sometimes, the work you do will pay off later. Just because it didn’t work the first time around doesn’t mean you can’t revisit the strategy and creatively rethink your approach.

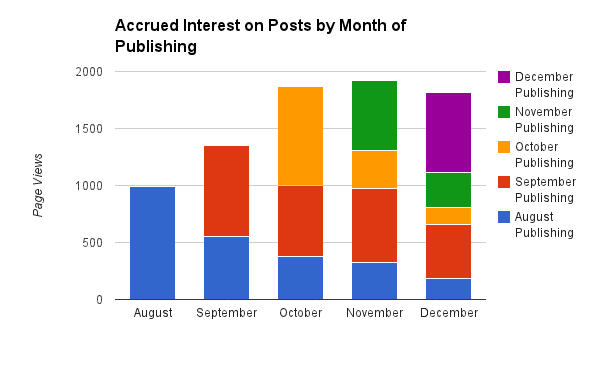

And your archive can be optimized too.

Your archive is one of your greatest assets. Every original piece of content on your site is like putting money in the bank that earns interest over time. It doesn’t take a genius to do the math and turn this insight into a long-term growth strategy. It just takes some lateral thinking.

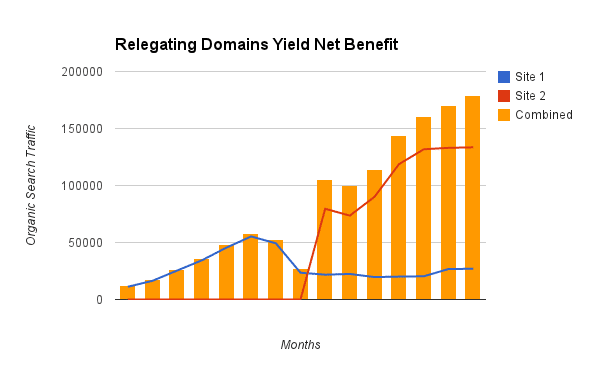

Untangling complicated websites can boost search traffic.

At the risk of boosting one domain while sacrificing another, consolidating domains can yield a net positive result. But changing the current structure of a website always entails the risk that some unknown ranking factor gets lost and topples the whole website from the top of the search results.

#SEOscience could cogitate for ages on the problem of reconciling multiple domains for a single business, but the discussion will be theoretical at best when, at the end of the day, someone has to do it to a deadline.

The risk has to be taken one way or another. So use your methodologies (and the Google Guidelines) in order to structure your work so you can untangle or catch a mistake before it happens. And again, it’s not science. If anything, such a methodical approach is part of the #creativeSEO process.

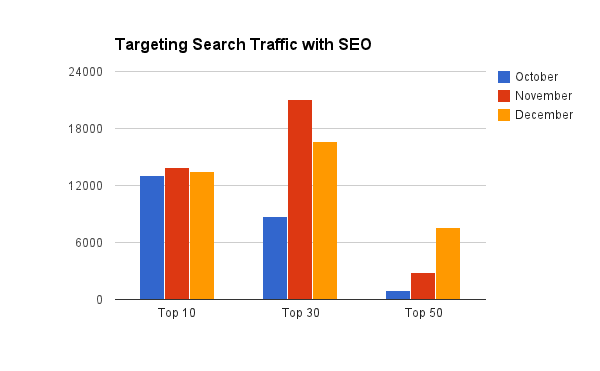

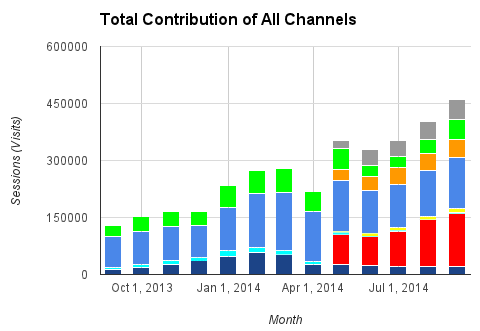

Migrating your blog to your core domain probably will boost search traffic.

Both your blog and site can generate traffic independently, but simply moving your blog content from a subdomain to a folder on the core domain can yield a search boost to all of your content. If there is any single SEO strategy that we could get scientific about, it’s probably this one. The effect of changing this one variable in the correct manner (i.e. with the right redirects and migration strategy) is one of the few things we could actually agree upon and easily share data about.
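The “right redirects” part usually comes down to a one-to-one map from every old blog URL to its new home, which you then serve as permanent (301) redirects. Here is a minimal sketch of that mapping, assuming a blog on `blog.example.com` moving to a `/blog` folder on `www.example.com`. The hostnames, the folder, and the function name `migrated_url` are all placeholders of mine, not details of the migration shown in the graphs.

```python
from urllib.parse import urlsplit, urlunsplit


def migrated_url(old_url,
                 blog_host="blog.example.com",   # assumed old subdomain
                 core_host="www.example.com",    # assumed core domain
                 folder="/blog"):                # assumed target folder
    """Map a blog-subdomain URL to its folder URL on the core domain.

    Returns None for URLs that are not on the blog subdomain, so they
    are left alone rather than redirected by accident.
    """
    parts = urlsplit(old_url)
    if parts.hostname != blog_host:
        return None
    # Keep the path and query intact so every old page has exactly one target.
    path = parts.path if parts.path.startswith("/") else "/" + parts.path
    new_path = folder.rstrip("/") + path
    # Fragments never reach the server, so they are dropped from the map.
    return urlunsplit((parts.scheme, core_host, new_path, parts.query, ""))


def redirect_map(old_urls):
    """Build {old_url: new_url} for every URL that should be redirected."""
    mapping = {}
    for url in old_urls:
        target = migrated_url(url)
        if target is not None:
            mapping[url] = target
    return mapping


print(redirect_map([
    "https://blog.example.com/2015/01/seo-science?ref=home",
    "https://www.example.com/about",
]))
```

Generating the map from a full crawl or sitemap of the old blog, rather than by hand, is what lets you catch an orphaned URL before the switch instead of after it.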

Google says, “Think about the words users would type to find your pages, and make sure that your site actually includes those words within it.”

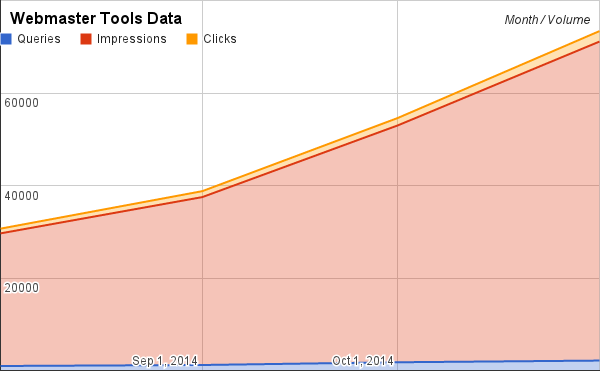

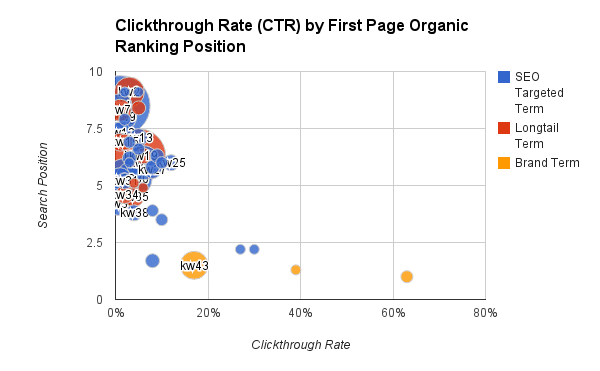

Click-through rate is mostly a factor of position.

If you have AdWords experience, you probably know this already. But it also holds true for SEO. Click-through rate (CTR) is a stronger indicator of how people use search engine interfaces than of how relevant your website is in the search results.

With so many keyword term and phrase adjustments for every intended search query, it’s almost absurd to associate the idea of absolute relevance with any single website. That doesn’t mean click-through rate is a useless metric, but it does mean it can only indicate so much. What it will nearly always indicate is that higher on the page is the most important place to be.

So, forget ‘ranking factors’ and just incorporate your understanding of that search user behavior into your SEO strategy.
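You can check the position effect on your own query data without any secret sauce. The sketch below pools clicks and impressions by ranking position and computes CTR for each. The rows are invented purely to show the shape of the calculation, and the tuple layout (query, position, impressions, clicks) is an assumption of mine rather than any particular tool’s export format.

```python
from collections import defaultdict

# Made-up rows: (query, rounded average position, impressions, clicks).
ROWS = [
    ("title tag seo", 1, 1000, 300),
    ("bounce rate", 1, 500, 150),
    ("blog migration", 3, 800, 80),
    ("seo science", 3, 200, 20),
    ("power law", 8, 1000, 10),
]


def ctr_by_position(rows):
    """Pool impressions and clicks per position and return CTR per position."""
    impressions = defaultdict(int)
    clicks = defaultdict(int)
    for _query, position, imps, clk in rows:
        impressions[position] += imps
        clicks[position] += clk
    # Skip positions with no impressions to avoid dividing by zero.
    return {
        position: clicks[position] / impressions[position]
        for position in sorted(impressions)
        if impressions[position] > 0
    }


for position, ctr in ctr_by_position(ROWS).items():
    print(f"position {position}: CTR {ctr:.1%}")
```

Pooling before dividing matters: averaging the per-query CTRs instead would let a query with ten impressions count as much as one with ten thousand.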

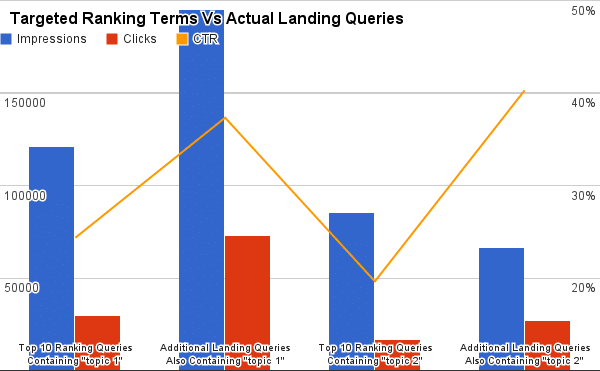

Topic targeting may be as important as keyword rankings but no extra secret sauce is required.

The very latest secret sauce advice for SEO in 2015 makes a big deal of the Knowledge Graph and over-eggs the claim that everything is about “entities” or “topics.” There would be nothing wrong with this advice except that most of the cases made for entity optimization are made under the auspices of improving rankings on specific terms.

Which is the wrong way of thinking about the problem that Google is trying to solve.

The entire entity-based data set, namely the Knowledge Graph, is designed for Google to cater to those classes of search queries that cannot be encapsulated in a single keyword expression of the idea. Ontological connections are deliberately disparate and vague so that Google can offer a best guess at what might be relevant to the user. You could equally imagine every sighting of a Knowledge Graph result as Google shrugging and saying, “I’m not sure whether you want to know more or less on this topic,” and then deriving the intention of the query (say, whether it is an informational rather than a transactional query) from the ontologically related links the user clicks next.

Therefore, any theory on entity optimization that purports to go beyond schema/markup is basically table stakes for anyone who has a grounding in the basic SEO principle of targeting search traffic. It’s no big whoop.

Google says, “Make pages primarily for users, not for search engines.”

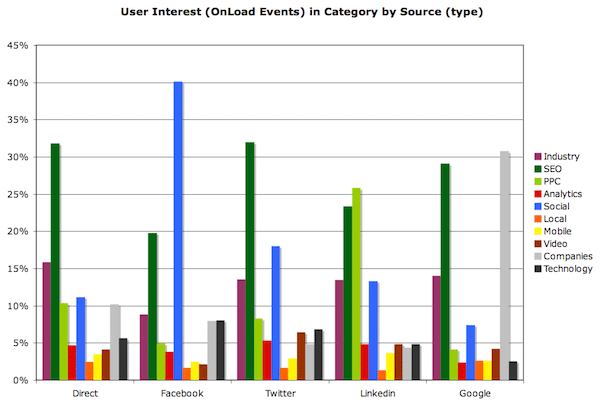

A lot of your best SEO decisions can come from just trying to get better analytics.

When you really design for the user, you have to design useful tools for yourself. Or put another way, you can’t design for the user without real user data. The graph below was created to tell the webmaster which content categories different audiences were most interested in.

As you can see in this case, all audiences are consistently interested in SEO, which is good as this is data from an SEO industry publication. Equally, it is fairly unsurprising that all the visitors from social networks were most interested in social media stories. Nor was it a huge surprise that visitors from Google Search were most interested in companies, because the content in that category was essentially all news and optimized for company names.

However, there is one big surprise: audiences that arrived from LinkedIn were disproportionately interested in PPC, which highlights an opportunity to engage them in a more specific and strategic way.

With the data set up in this way, one could start to try some little experiments to change the pattern. But the primary skill required to get to this point is just learning about how to set up your analytics correctly (sorry, no secret sauce required).

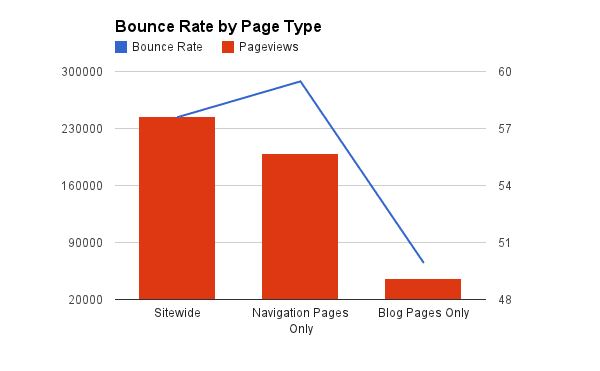

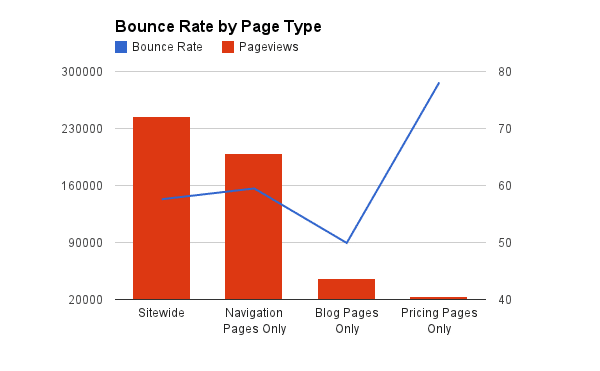

Bounce rate is adjustable (and pretty meaningless in aggregate).

The graph above just maps onload events in Google Analytics to specific content categories. And just this process of adding the onload events cut the average bounce rate to a fraction of what it was, simply because we’re now dictating that every page load is deliberate.

Business owners and marketers continually misread bounce rates because the measurement itself assumes a lack of user interaction. To make this metric useful you need to tweak your analytics setup and deliberately ascribe some kind of purpose to pages and events on your site (the irony here is that you have to make the events/actions that are almost too trivial and too obvious to measure be the ones that count most). Otherwise, you’re just measuring what you already know, which is that most users will bounce (and no one needs to prove that).

But for the sake of a golden rule, I’ll advise that you “stop watching bounce rates at an entire site level.” In general, your “website bounce rate” really doesn’t matter unless you’re looking at a specific page. If you insist on worrying about bounce rates, then consider them only as part of an analysis of individual pages or segmented sets of pages on your website.
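To make the point about aggregate versus segmented bounce rates concrete, here is a minimal sketch. It treats a bounce as a session whose only interaction hit is the first pageview, which is also why counting an extra event as an interaction makes the rate drop. The session records, the field names, and the helper names are all hypothetical; this is not a Google Analytics export format or API.

```python
from collections import defaultdict

# Made-up sessions. "interaction_hits" counts pageviews plus any events
# that the analytics setup treats as interactions.
SESSIONS = [
    {"source": "google", "landing_page": "/seo/title-tags", "interaction_hits": 1},
    {"source": "google", "landing_page": "/seo/title-tags", "interaction_hits": 3},
    {"source": "google", "landing_page": "/ppc/bidding", "interaction_hits": 2},
    {"source": "google", "landing_page": "/ppc/bidding", "interaction_hits": 4},
    {"source": "twitter", "landing_page": "/social/hashtags", "interaction_hits": 1},
    {"source": "twitter", "landing_page": "/social/hashtags", "interaction_hits": 1},
]


def is_bounce(session):
    """A session bounces when nothing happened beyond the first pageview."""
    return session["interaction_hits"] <= 1


def overall_bounce_rate(sessions):
    """The site-wide number this post advises you to stop watching."""
    return sum(is_bounce(s) for s in sessions) / len(sessions)


def bounce_rate_by(sessions, key):
    """Bounce rate per segment, where key is a field such as 'source'."""
    totals = defaultdict(int)
    bounces = defaultdict(int)
    for session in sessions:
        segment = session[key]
        totals[segment] += 1
        if is_bounce(session):
            bounces[segment] += 1
    return {segment: bounces[segment] / totals[segment] for segment in totals}


print("site-wide:", overall_bounce_rate(SESSIONS))
print("by source:", bounce_rate_by(SESSIONS, "source"))
print("by page:  ", bounce_rate_by(SESSIONS, "landing_page"))
```

In this toy data the site-wide figure sits halfway between two segments that behave nothing alike, which is exactly why the aggregate tells you so little.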

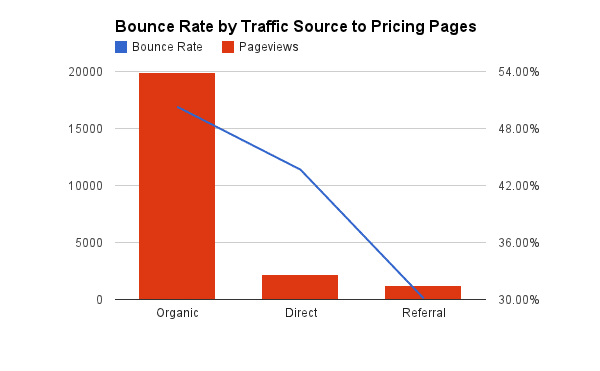

And bounce rates increase with intentionality of the page.

This just means that people are more likely to bounce from the page the less relevant the page is to their current information needs. This is true regardless of where the page originally ranked in the search result pages. But that behavior alone points to the inherent difficulty for any SEO to claim to understand how relevance really is at play beyond the “luck of the draw.”

And bounce rates increase with intentionality of the medium.

So if they didn’t come from search engines, they probably shouldn’t be included in your SEO analysis — or your overall marketing analysis for that matter. You have to segment your audience if you want the bounce rate metric in Google Analytics to tell you anything worthwhile at all.

[IMAGE MISSING: This graph shows how segmenting bounce rate on all pages by source suggests that visitors from search engines and social networks display markedly different behavior.]

So can we stop advising people to worry about bounce rates as a ranking factor? Bouncing users are inevitable, and whatever bouncing signifies to Google will be benchmarked on a completely different scale, as with the page layout update, one that takes the entire design of the internet into account (which probably found that sites cluttered with ads caused more users to bounce at a search level).

So, clicking the back button is just a fact of life.

To drive the point home once and for all, bounce rate is just a measurement, not a signal. It can only identify behavior that already exists. It is a useful indicator, but not a useful predictor of your ultimate SEO objectives. Sure, measured correctly, bounce rates can help you evaluate your business objectives and design better user experiences, which we know can ultimately yield a benefit on search engines. But to focus solely on bounce rates is to fixate on the finger when it’s clearly pointing to the moon.

Buzz Lightyear says, “Power Laws, Power Laws Everywhere”

In my opinion, nearly all the things we can fairly test in SEO will nearly always produce a power law distribution. Everything about Google Search and how it presents the entire question of relevancy has to lead to this kind of “winner takes all” result.
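If you want a quick look at whether your own numbers have that “winner takes all” shape, one rough check is to rank the values (clicks per position, visits per page, links per post) and fit a straight line to log(value) against log(rank). The sketch below does that with nothing but the standard library. The function name `loglog_slope` and the sample values are mine, and a straight line on a log-log plot is only suggestive of a power law, not proof of one.

```python
import math


def loglog_slope(values):
    """Least-squares slope of log(value) against log(rank).

    Rank 1 is the largest value. A steeply negative slope means the top
    few items take most of the total.
    """
    ranked = sorted((v for v in values if v > 0), reverse=True)
    if len(ranked) < 2:
        raise ValueError("need at least two positive values")
    xs = [math.log(rank) for rank in range(1, len(ranked) + 1)]
    ys = [math.log(value) for value in ranked]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return covariance / variance


# Made-up example: values that fall off exactly as 1 / rank.
visits = [1000 / rank for rank in range(1, 11)]
print(round(loglog_slope(visits), 3))
```

A slope near zero would mean attention is spread evenly across the list; the further below zero it falls, the more the first few places dominate.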

From our own experience, we all know whether something is relevant or not. But what our own experience can never tell us is just how fragile the concept of relevance is from moment to moment at “Google Scale.” We can’t even count how many times the question of what’s relevant comes up for ourselves in day-to-day life, let alone in the eyes of billions of users.

As we are truly unable to infer the inner workings of the information seeker’s input (the keyword queries), we can only make assumptions about it based on the output (the ten blue links they click). Which means, in general, we’re only testing the design of a search interface and have gotten no closer to defining the descriptor we tout as “relevance.”

Ultimately, for Google, once you move beyond the backstory of PageRank, there really is no “algorithm” without clicks. It’s just a giant sample testing machine. It’s a weather vane that points to what might be relevant, yet once the results are displayed it’s down to the user to choose. Search engines and social networks might yearn for the day when the “ten blue links” design we see in search results pages is a thing of the past, but that day will only come if the new user interface proves to be the one most universally adopted by users.

Search engines are currently designed in such a way that there can be no clicks without lists. And anything displayed as a list implies an order or set of priorities, even in an unordered list. That habit of introjecting an implied order is automatic and caused by the simple fact of how we read. We read lists from top to bottom, which incites certain behaviors that have nothing to do with relevance.

That such behavior massively influences any concept of relevance should not be perpetually discounted!

In fact, I would argue it is just about the only thing the SEO industry can sensibly test without actually being Google.

This article was originally published in State of Digital on 28th January, 2015.